VIGOR: Visual Goal-In-Context Inference for Unified Humanoid Fall Safety

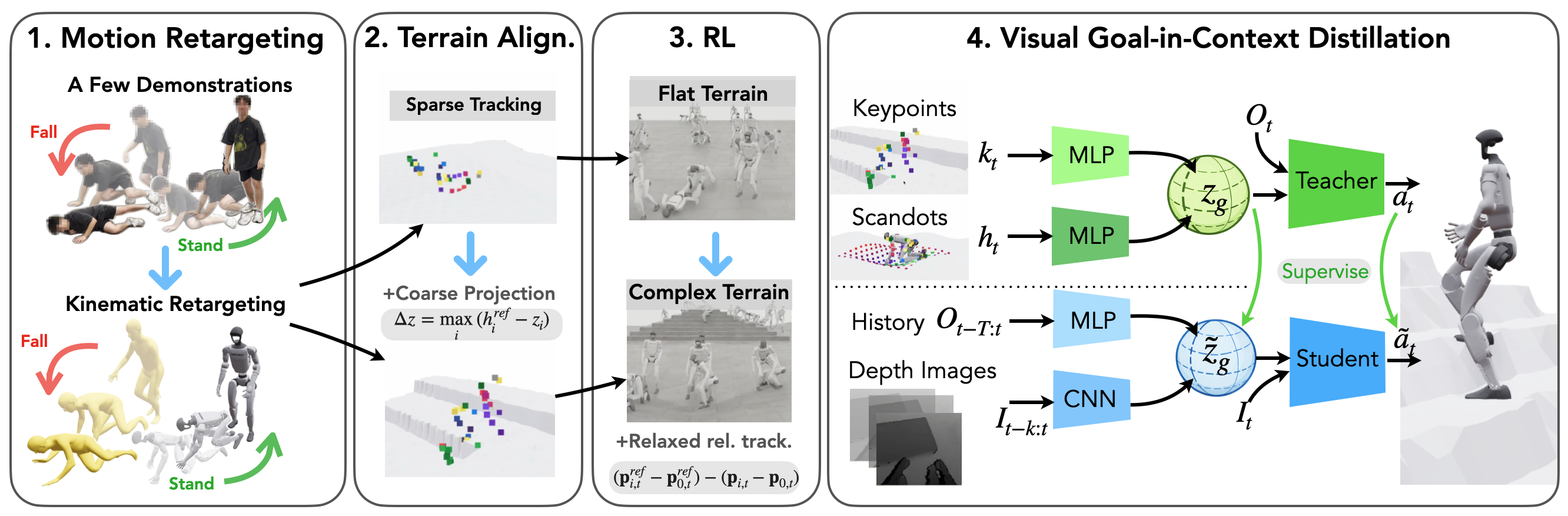

TL;DR: A teacher-student framework that distills terrain-aware recovery goals into a deployable egocentric-depth policy.

Reliable fall recovery is essential for humanoid robots to operate in real, cluttered environments. Unlike quadrupeds and wheeled systems, humanoids experience high-energy impacts, complex whole-body contact, and significant viewpoint changes during a fall, and a failure to stand up leaves the robot effectively inoperable. Existing approaches either treat impact mitigation and standing up as separate modules or assume flat terrain and blind, proprioception-only sensing, which limits robustness and safety in unstructured settings. We introduce a unified framework for fall mitigation and stand-up recovery that adds perceptual awareness through egocentric vision. Our method employs a privileged teacher that learns recovery strategies conditioned on sparse demonstration priors and local terrain geometry, and a deployable student that infers the teacher’s goal representation from egocentric depth and short-term proprioception via distillation. This enables the student to reason about both how to fall and how to get up while avoiding unsafe contacts. Simulation experiments demonstrate higher success rates and safer recovery than blind baselines across diverse non-flat terrains and disturbance conditions.
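The distillation step described above can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the function names `student_input` and `goal_distillation_loss` are hypothetical, the student input is simply a flattened depth frame concatenated with a short proprioception window, and the loss is a plain mean squared error between the teacher's goal vector and the student's prediction. The text above does not specify the actual network inputs, goal dimensionality, or loss.

```python
from typing import List, Sequence


def student_input(depth_image: Sequence[Sequence[float]],
                  proprio_history: Sequence[Sequence[float]]) -> List[float]:
    """Flatten one egocentric depth frame and a short proprioception window.

    Hypothetical input layout: depth pixels first (row-major), then the
    proprioceptive readings ordered oldest to newest.
    """
    flat_depth = [d for row in depth_image for d in row]
    flat_proprio = [p for step in proprio_history for p in step]
    return flat_depth + flat_proprio


def goal_distillation_loss(teacher_goal: Sequence[float],
                           student_goal: Sequence[float]) -> float:
    """Mean squared error between teacher and student goal vectors.

    Assumed loss for illustration only; the teacher goal would come from a
    privileged encoder and is treated as a fixed regression target here.
    """
    if len(teacher_goal) != len(student_goal):
        raise ValueError("goal vectors must have the same length")
    if not teacher_goal:
        raise ValueError("goal vectors must be non-empty")
    squared = [(t - s) ** 2 for t, s in zip(teacher_goal, student_goal)]
    return sum(squared) / len(squared)
```

In such a setup, the student network would map `student_input(...)` to a goal vector, and minimizing `goal_distillation_loss` would pull that vector toward the one produced by the privileged teacher.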

We investigate the impact of vision on fall recovery by comparing our visual policy to a blind, proprioception-only policy. The controller is activated only after the robot has tilted past 20 degrees, ensuring recovery begins from a true fall. The blind policy exhibits more unsafe behaviors, including increased head and neck contacts and erratic motions.

Unsafe Blind policy

Safe Visual policy (ours)

Our visual fall-recovery policy training pipeline has four key components:

We evaluate our policy on fall mitigation tasks across diverse terrains. We start the robot in a default pose, and engage the policy once the robot has tilted past 20 degrees.
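The 20-degree engagement rule can be sketched as follows. This is a minimal illustration under stated assumptions, not the evaluation code: it assumes tilt is measured as the angle between the body's up axis and world up, computed from the gravity direction expressed in the body frame (so an upright robot reads roughly `(0, 0, -1)`). The names `tilt_angle_deg` and `should_engage` are hypothetical; only the 20-degree threshold comes from the text.

```python
import math
from typing import Tuple

TILT_THRESHOLD_DEG = 20.0  # threshold stated in the text


def tilt_angle_deg(gravity_body: Tuple[float, float, float]) -> float:
    """Angle in degrees between the body up axis and world up.

    Assumes gravity_body is the gravity direction in the body frame,
    pointing down, so an upright robot gives (0, 0, -1) and 0 degrees.
    """
    gx, gy, gz = gravity_body
    norm = math.sqrt(gx * gx + gy * gy + gz * gz)
    if norm == 0.0:
        raise ValueError("gravity vector must be non-zero")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_tilt = max(-1.0, min(1.0, -gz / norm))
    return math.degrees(math.acos(cos_tilt))


def should_engage(gravity_body: Tuple[float, float, float],
                  threshold_deg: float = TILT_THRESHOLD_DEG) -> bool:
    """Return True once the robot has tilted past the threshold."""
    return tilt_angle_deg(gravity_body) > threshold_deg
```

For example, a robot pitched forward by 30 degrees has a body-frame gravity direction of about `(sin 30°, 0, -cos 30°)`, which exceeds the threshold and would hand control to the recovery policy, while a 10-degree lean would not.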

We also evaluate our policy on stand-up tasks across a range of terrains and diverse initial configurations.
